Extinction

Extinction is the process of getting rid of unwanted conditioned behaviour. In effect, it is a matter of unlearning something previously learned.

Operantly conditioned behaviour is maintained by reinforcement. Thus the key to getting rid of it lies in making sure that it does not get reinforced.

Remove the reinforcer – This is the direct and obvious solution.

e.g., if a child stands on his desk because this action is reinforced by attention, then the behaviour may be extinguished by ignoring (not reinforcing) the behaviour.

Satiation – a reinforcer may lose its effect if a person gets too much of it. This is called satiation.

e.g., if a large quantity of food is delivered for each peck at a disk, a pigeon may quickly stop pecking because it is satiated (full), or, if a teacher always says "Good work", students may stop responding to it. That is why the variety of social and activity reinforcers available, as mentioned in the section on reinforcement, is so important. You don't have to use the same words all the time. The English language provides us with many ways of saying essentially the same thing.

Classically conditioned behaviour is not maintained by reinforcement so it is a waste of time to look for a reinforcer to remove and thus eliminate the behaviour. The key to eliminating classically conditioned behaviour is to remove the unconditioned stimulus. Gradually the conditioned stimulus will lose its power to elicit the response. For example, if Pavlov's dogs heard the bell rung, but never again experienced a squirt of vinegar in the mouth at the time the bell was rung, the conditioning would gradually wear off. That is, the dogs would salivate less and less in response to the bell until gradually they stopped salivating altogether when presented with that stimulus.

Classically conditioned behaviour can be re-established rather easily after it has apparently died out. One or two new pairings of the sound of the bell and getting a squirt of vinegar in the mouth would restore the conditioning in Pavlov's dogs.

Phobias are common examples of classically conditioned behaviour. Often some gradual approach, a type of shaping, is used in overcoming a phobia. This approach is called desensitization.

e.g., a child who is afraid of dogs is first exposed to a dog at some distance which is tolerable to the child. Over a period of time, the dog is brought closer until the child is ready to touch and play with the dog.

Merely removing a reinforcer or an unconditioned stimulus may result in very slow extinction of a behaviour. In both operant and classical cases, extinction may be speeded up by reinforcing behaviour incompatible with the behaviour to be extinguished. Two behaviours are said to be incompatible if it is impossible (or at least very difficult) to perform one while performing the other -- laughing and crying are incompatible behaviours. For a child with a fear of dogs, besides the desensitization procedure described above, it would help to get the child involved in an enjoyable activity while in the presence of a dog. If the child is happy and laughing playing catch, it is very difficult for the child to be simultaneously cringing in fear and crying. Therefore, an adult could play with the child and make favourable comments about the game of catch, strengthening that behaviour.

Schedules of Reinforcement

The persistence of an operantly conditioned behaviour is strongly affected by how reinforcement is delivered. For instance, consider two people who both want to become freelance writers selling articles to magazines. We will assume that having an article published is reinforcing for these people. Their experience with the first ten articles that they each submit for publication is shown below. ('A' stands for 'Accepted for publication' -- 'R' stands for 'Rejected').

| Writer A | Writer B |
|----------|----------|
| A | R |
| A | R |
| A | A |
| R | R |
| R | R |
| R | R |
| R | A |
| R | R |
| R | R |
| R | A |

Writer A is likely to conclude that the early success was just a flash in the pan and that writing is not going to be a rewarding career. Writer B is likely to feel quite satisfied. No writer expects to have everything published. Writer B feels that this is simply the way it is in freelancing -- some articles get published and some don't.

You will notice that they both had three out of ten articles accepted for publication. They both had the same percentage of reinforcement. However, the patterns of reinforcement are different and we are not surprised that they come to different conclusions about the viability of their career choices. We can understand these different conclusions at an intuitive level, but behavioural psychologists explain them in terms of the patterns or schedules of reinforcement that the people have experienced.
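The comparison between the two writers can be checked with a short sketch. It assumes the table above is read row by row, so that Writer A's three acceptances come first and Writer B's are spread out. The function names are illustrative only and are not part of the course material.

```python
# Outcomes for the ten submissions, read from the table above
# ('A' = accepted, 'R' = rejected). The row-by-row reading is an assumption.
writer_a = list("AAARRRRRRR")
writer_b = list("RRARRRARRA")

def acceptance_rate(outcomes):
    """Fraction of submissions that were accepted (reinforced)."""
    return outcomes.count("A") / len(outcomes)

def rejections_since_last_acceptance(outcomes):
    """Number of rejections at the end of the record, after the last 'A'."""
    count = 0
    for outcome in reversed(outcomes):
        if outcome == "A":
            break
        count += 1
    return count

rate_a = acceptance_rate(writer_a)
rate_b = acceptance_rate(writer_b)
drought_a = rejections_since_last_acceptance(writer_a)
drought_b = rejections_since_last_acceptance(writer_b)
```

Both rates come out equal, but Writer A ends on a run of seven rejections while Writer B's most recent submission was accepted. The overall percentage is the same; only the pattern differs.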

We will examine five different schedules of reinforcement. It is possible to combine and mix these schedules in various ways but we will leave most of that to the experts.

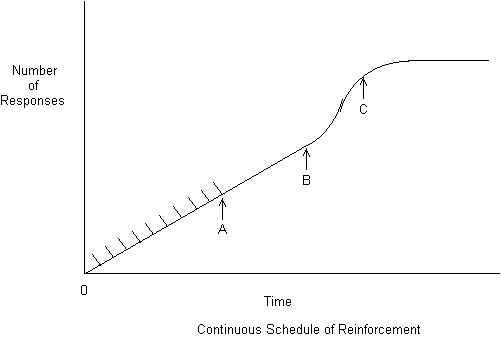

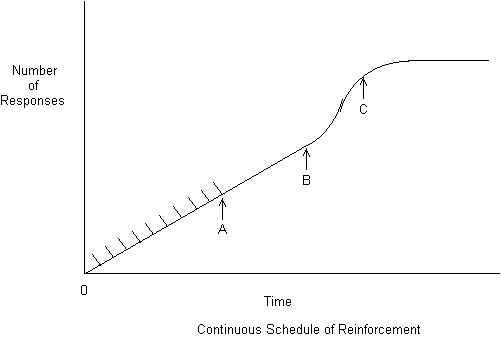

To help understand the schedules of reinforcement, we will use graphs. The graphs record the total number of responses made over time. Imagine a mechanical pen being moved at a constant rate from the left side of the page toward the right. Each time a response is emitted, the pen moves another step toward the top of the page. Each time a reinforcement is delivered, a short diagonal stroke is drawn on the upper left side of the line.

The graphs reflect the prototypical patterns of behaviour that are produced on various schedules of reinforcement. The graphs reflect overall tendencies, not the behaviour of any particular individual person or animal. Actual graphs from experiments would be much more wiggly than those presented below. I have smoothed out the graphs to make the main features easier to see.
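For readers who find code easier to follow than a description of a pen on paper, here is a minimal sketch of how such a cumulative record could be built. The function name `cumulative_record` and the sample response times are hypothetical and chosen only for illustration.

```python
def cumulative_record(response_times, duration):
    """Return the total number of responses made by each whole time step.

    response_times: times (in arbitrary units) at which responses occurred.
    duration: number of time steps to record.
    """
    record = []
    total = 0
    ordered = sorted(response_times)
    index = 0
    for t in range(1, duration + 1):
        # Count every response that happened up to and including time t.
        while index < len(ordered) and ordered[index] <= t:
            total += 1
            index += 1
        record.append(total)
    return record

# A steady responder climbs one step per time unit.
steady = cumulative_record([1, 2, 3, 4, 5, 6], duration=6)

# A responder who pauses produces a flat stretch in the record.
paused = cumulative_record([1, 2, 6], duration=6)
```

In this representation a steep rise means a high rate of responding, and a flat stretch means a pause. The record never goes down, because it counts total responses so far.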

Continuous Reinforcement (CR)

Every response is followed by a reinforcer. If a pigeon pecking a key gets fed after every peck, pecking is being continuously reinforced – there is a 1:1 ratio between correct responses and reinforcements. A similar situation exists with a properly functioning Coke machine. Every correct response (putting in coins) gets a reinforcer (a can of Coke comes out).

The following graph shows typical characteristics of continuously reinforced behaviour. The passage of time is shown in the horizontal direction. The total number of responses produced is shown in the vertical direction. The short marks above and to the left of the line indicate reinforcements.

Note that at time A the last reinforcer is given. For a short time after that (from A to B) responding continues without reinforcement. Then there is usually an upsurge in responding (from B to C). This is often accompanied by strong emotion. For example, suppose that a Coke machine, which has always reinforced you in the past, takes your money today but doesn't give you a Coke. You may quickly "try again", i.e., emit another coin-insertion response. If it fails to reinforce you again, you may or may not try a third time, but you are very likely to be feeling angry at the machine and may even kick it or inflict verbal abuse upon it. You are then in the B to C region of the graph.

After time C, the end of the relatively brief upsurge in responding, the rate of responding rapidly drops off to zero. Then the behaviour has been extinguished.

In general, behaviour maintained by continuous reinforcement:

is slow but steady

increases in rate after reinforcement ceases (often accompanied by an emotional reaction)

dies out quickly without further reinforcement; in fact, continuously reinforced behaviour is the easiest kind to extinguish

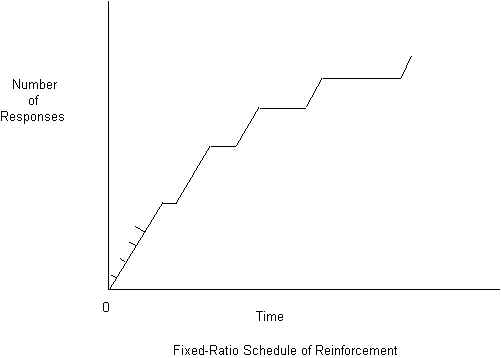

Fixed-Ratio Reinforcement (FR)

Instead of reinforcing every correct response as in CR, reinforcement is given after some fixed number of correct responses, say 5 or 10. This is the essential idea behind piecework – so many units of production (responses) for a unit of reward (reinforcer).

In a laboratory demonstration of fixed-ratio reinforcement, after, say, a pigeon pecked a target 6 times it would be fed. A reinforcer would be delivered immediately upon completion of the series of responses. In a factory operated on a piecework basis, delivery of the reinforcer is delayed until payday, but the schedule of reinforcement is still essentially fixed-ratio.
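The fixed-ratio rule is simple enough to state as a short sketch. The function name `fixed_ratio` is hypothetical. The ratio of 6 matches the pigeon example above, and a ratio of 1 reproduces continuous reinforcement.

```python
def fixed_ratio(ratio, n_responses):
    """Return one boolean per response: True where a reinforcer is earned.

    Every `ratio`-th correct response is reinforced.
    """
    return [i % ratio == 0 for i in range(1, n_responses + 1)]

# FR with a ratio of 6: the 6th and 12th pecks are reinforced.
fr6 = fixed_ratio(6, 12)
reinforced_pecks = [i + 1 for i, earned in enumerate(fr6) if earned]

# A ratio of 1 reinforces every response, i.e. continuous reinforcement.
cr = fixed_ratio(1, 5)
```

This only models when reinforcers are delivered. It says nothing about how the animal or person responds, which is what the graphs describe.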

Responding will continue much longer after the last reinforcer is delivered in the FR case than the CR case. Finally a pause comes, then responding resumes at the original high rate, then a longer pause comes, then a shorter period of high-rate responding and so on. This is an idealized representation of the general trend. The overall tendency is for the periods of responding to become shorter and the pauses to become longer, but in a particular case there may be instances when some response periods are longer than preceding ones or some pauses shorter than those that have gone before. Similar comments could be made about the graphs for VR and FI schedules of reinforcement.

Responding on the FR schedule:

is at a high rate (this schedule produces the highest response rate)

is moderately resistant to extinction

Extremely high ratios can be attained, requiring more than 100 responses to earn one reinforcer.

Variable-Ratio Reinforcement (VR)

This schedule is similar to FR. However, a reinforcer is delivered not after exactly 10 responses, say, but after a randomly varying number of responses that averages 10, e.g., first 2, then 8, then 15, then 11, …
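A variable-ratio schedule can be sketched as a list of randomly chosen response requirements. The notes do not say how the random numbers are chosen, so the uniform range used here is an assumption made purely for illustration, as are the function name and the seed.

```python
import random

def variable_ratio(mean_ratio, n_reinforcers, seed=0):
    """Return the number of responses required for each successive reinforcer.

    Assumption: each requirement is drawn uniformly from
    1 .. 2 * mean_ratio - 1, so the long-run average equals mean_ratio.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return [rng.randint(1, 2 * mean_ratio - 1) for _ in range(n_reinforcers)]

# Many reinforcers on a schedule that averages 10 responses each.
requirements = variable_ratio(10, n_reinforcers=10_000)
average = sum(requirements) / len(requirements)
```

Individual requirements range from 1 to 19 responses, but the average over a long run stays close to 10. The responder cannot tell in advance which response will pay off.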

The rate of responding is a little lower than FR but, although the graph here cannot give an accurate picture due to space limitations, behaviour maintained on a VR schedule takes much longer to extinguish than behaviour maintained on an FR schedule. A slot machine is a perfect place to find behaviour maintained by VR reinforcement.

Responding on a VR schedule is:

Fixed-Interval Reinforcement (FI)

Interval schedules are based on the passing of time, not the number of responses. After the required time has elapsed, the first desired response emitted earns a reinforcer.

In the FI case, the interval is fixed, i.e., each interval is of the same length, say 45 seconds. A problem with the FI schedule is that animals catch on to it quickly and simply wait for the time to elapse, give the minimum response, then collect the reinforcer. This detective ability is shared by rats, pigeons, chimpanzees, small children, and even, it is rumoured, many adults. The phenomenon is well described in non-psychological terms as goofing off until the deadline gets close. This assumes that the interval between reinforcements is longer than that needed to perform the required task. This produces an interesting graph.
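The reason waiting pays off on an FI schedule can be seen in a small sketch. It assumes each interval is timed from the previous reinforcer (or from the start of the session), which is one common arrangement but is not stated in these notes. The function name and the two response patterns are hypothetical.

```python
def fixed_interval(interval, response_times):
    """Return the response times that earn a reinforcer on an FI schedule.

    Assumption: a reinforcer becomes available `interval` time units after
    the previous reinforcer (or after the start). The first response at or
    after that moment collects it.
    """
    reinforced = []
    available_at = interval
    for t in sorted(response_times):
        if t >= available_at:
            reinforced.append(t)
            available_at = t + interval
    return reinforced

# One responder works every second for three minutes.
steady = list(range(1, 181))
# Another responds only when each 45-second interval is up.
waiter = [45, 90, 135, 180]

earned_by_steady = fixed_interval(45, steady)
earned_by_waiter = fixed_interval(45, waiter)
```

Both responders collect the same four reinforcers, although one made 180 responses and the other only 4. Under this rule the extra responses earn nothing.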

After each reinforcement, responding all but stops entirely until time for the next reinforcement draws near. After the last reinforcement there is a quite rapid extinction as the "scallops" in the graph become longer and more shallow.

Responding on an FI schedule is:

Variable-Interval Reinforcement (VI)

As with FI reinforcement, the first response emitted after a certain time has elapsed gets a reinforcer. As suggested by the word "variable", the time intervals are of different lengths.

Unlike responding on other schedules of reinforcement, on the VI schedule there are no sharp breaks. Responding continues long after the last reinforcer is given and very gradually reduces in rate.
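A variable-interval schedule can be sketched the same way as a fixed-interval one, except that the waiting time changes after every reinforcer. The uniform range for the waits, the mean of 45 time units, the seed, and the function name are all assumptions chosen for illustration.

```python
import random

def variable_interval(mean_interval, response_times, seed=0):
    """Return the response times that earn a reinforcer on a VI schedule.

    Assumption: each wait is drawn uniformly from
    1 .. 2 * mean_interval - 1 and is timed from the previous reinforcer.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable

    def next_wait():
        return rng.randint(1, 2 * mean_interval - 1)

    reinforced = []
    available_at = next_wait()
    for t in sorted(response_times):
        if t >= available_at:
            reinforced.append(t)
            available_at = t + next_wait()
    return reinforced

# A responder who makes one response per time unit for 1000 units.
steady_responses = list(range(1, 1001))
earned = variable_interval(45, steady_responses)
gaps = [later - earlier for earlier, later in zip(earned, earned[1:])]
```

Because the gaps between reinforcers are unpredictable, there is no safe time to stop responding. This fits the steady responding without sharp breaks described above.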

Responding on a VI schedule is:

In practical situations it may be very difficult to distinguish between VR and VI schedules. In some cases, asking a boss for a raise might be reinforced on a VI schedule. Certainly some time must elapse between one raise and the next. Just how much time may be a variable determined by the state of the boss's health, the state of the business's health, and other imponderables. Sometimes asking produces a raise and sometimes it doesn't. For the employee it would be difficult to determine whether his asking behaviour is being reinforced on a VI or VR schedule. However, the results are going to be much the same. A fairly high rate of requesting will develop and requesting will persist for a long time even if no further raises are forthcoming.

Schedules of reinforcement may be used to guide learning in school. A new behaviour should be reinforced on a CR schedule to get it off to a good start. Then a change can be made to FR. Then the FR ratio may be "stretched", e.g., 2:1 --> 5:1 --> 10:1. Then a change can be made to a variable schedule to produce lasting behaviour – very difficult to extinguish.
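The progression from CR through a stretched FR ratio can be laid out as a simple plan. This is only a sketch: the number of reinforcers given at each stage is hypothetical, since the notes do not say how long to stay at each ratio, and the final move to a variable schedule is left out.

```python
def stretched_schedule(stages):
    """Return one boolean per response: True where a reinforcer is earned.

    stages: list of (ratio, n_reinforcers) pairs applied in order, where
    `ratio` responses are required for each reinforcer in that stage.
    """
    record = []
    for ratio, n_reinforcers in stages:
        for _ in range(n_reinforcers):
            # ratio - 1 unreinforced responses, then one reinforced response.
            record.extend([False] * (ratio - 1) + [True])
    return record

# CR (1:1) first, then FR stretched 2:1, 5:1, 10:1.
# Three reinforcers per stage is an arbitrary choice for illustration.
plan = [(1, 3), (2, 3), (5, 3), (10, 3)]
record = stretched_schedule(plan)
total_responses = len(record)
total_reinforcers = sum(record)
```

Under this plan the learner makes 54 responses for 12 reinforcers, with the responses per reinforcer rising from 1 to 10 as the ratio is stretched.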

A convenient characteristic of people is that they find reinforcement in the performance of many activities. Thus, it is often not necessary to provide external reinforcers. External reinforcement may be important to get a behaviour started, but we hope more natural reinforcers will take over after a person gains a basic competence.

NOTE: You may have noticed that the CR schedule is just a special FR schedule (1:1). While mathematically that is true, they produce decidedly different behaviour patterns so we will treat them as completely distinct.

Indicate the schedule of reinforcement operating in each of the following (CR, FR, VR, FI or VI):
